Animator Wen Zhang on Connecting Music and Motion and Building Software from Scratch

This is a guest post by Wen Zhang, one of 6 animators participating in this year’s Hothouse program, the NFB’s 12-week paid apprenticeship for emerging filmmakers.

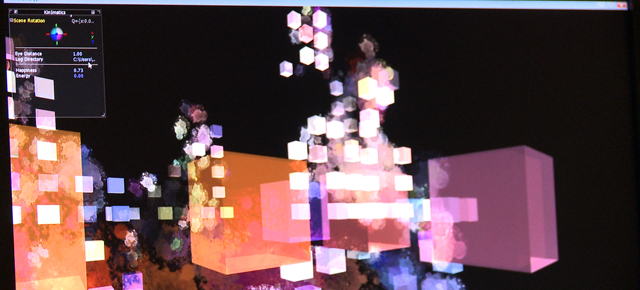

On paper, my job is “filmmaker”, but now, two-thirds of the way through Hothouse 8, I like to think that we are entering new territory and redefining what that word encompasses. The project (working title: “dream.exe”) is an experiment in combining Kinect motion capture, music, and generative visuals into something compelling, and the fun comes from wearing a variety of hats to put it together.

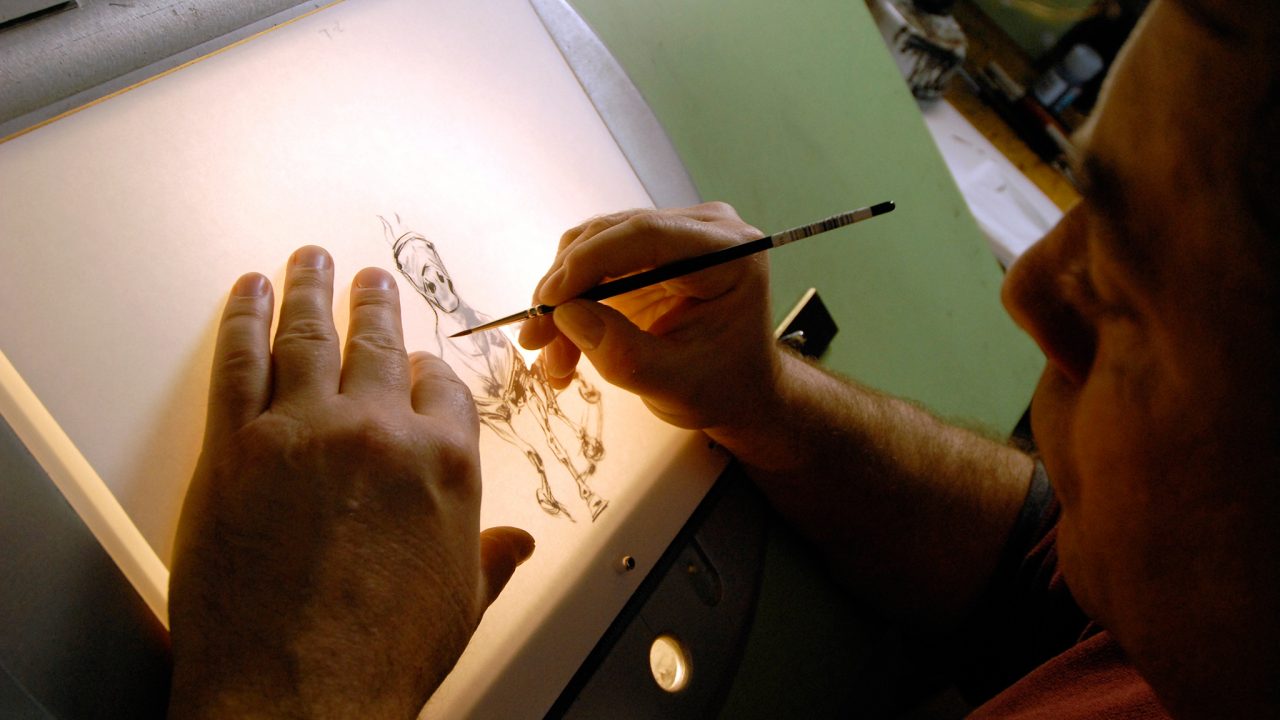

One of the odd things that you’ll notice if you pass by my desk often (and a lot of people do) is that I’m almost always staring at C++ code. The project is conceived as two distinct experiences, a linear film and an interactive piece, and I am building my own software from the ground up to fulfill this vision, because existing 3D programs like Maya or game engines aren’t going to cut it.

First of all, the interactive version needs to run in real time, so Maya is out. Secondly, I have my own strange ideas for non-photorealistic representations of 3D “people clouds”, and game engines aren’t really designed for that sort of thing. A constant challenge is balancing visual sophistication with performance requirements. It’s like translating images in my mind’s eye into code, and it’s ironic that I have to think in cold logic to portray the organic and lively subconsciousness of a (fictional) computer.

On the other (and equally important) half of the equation, I am working with composer/sound designer Luigi Allemano, classical composer Ana Sokolovic, and UQAM intern Louis Gingras to create a layered musical soundscape that forms the living heartbeat of the piece. What’s interesting is that, in the interactive version, the musical elements are shaped by the viewer’s movements, which also play into the way the visuals look and feel.

At the end of the day, we have a bunch of elements–motion capture data, music, algorithms, and even some live action footage–that all need to get mixed together to make a coherent whole. It’s often easy to lose sight of the overall vision, and sometimes it’s hard to know what will work unless we try it and see if it sets like good mayonnaise (as our dear producer Jelena often tells me). Whatever it is, it’s going to be awesome.
