First-Ever Experimental Lab NFB/ONFxp Concludes with Inspiring Lightning Talks

Launched at the end of February, the NFB/ONFxp experimental lab gave four innovative artists the opportunity to pursue their wildest ideas, push the boundaries of their imagination, and connect with like-minded and experienced professionals in related industries.

The program, spearheaded by the English Animation Studio, supported the artists by pairing each of them with a mentor to help guide their process and by providing access to all the equipment and resources needed to carry out their experiments. On April 10, after just six weeks of trials and tribulations, the four ambitious creators unveiled their experiments and shared what had transpired with a full house at GamePlay Space, only a stone’s throw from the NFB’s soon-to-be headquarters in downtown Montreal.

Shawn Laptiste

Shawn’s initial proposal for Cellular Automata involved creating a type of augmented reality (AR) app that would allow cell phones to act as individual pixels among hundreds of others, together forming a massive pop-up art installation. Hoping to create a new medium that artists could use to exhibit their work, Shawn began exploring the most logical way to get the tool onto people’s devices. The project has changed significantly since he first pitched it. During a meeting with Frank Nadeau (Engineer, Development and Media at the NFB), Shawn had an epiphany that completely altered its course:

“He suggested that I use a camera to view the cell phones and have that video stream drive what is displayed on the screens of the cell phones. This change would result in transforming the development away from triangulation and positioning to computer vision.” – Shawn Laptiste

This new direction would let the project be displayed without requiring the crowd to download an app onto their phones. A download takes time, would likely eat into people’s mobile data, and requires granting permissions, all of which would likely turn eager audience members away.

Approaching the experiment from this new angle, Shawn then moved on to perfecting his genetic algorithm, which helps each phone determine which colour of pixel it will display. He also worked on narrowing down the best way for phones to receive and refresh the visual data. And what about a real-life situation, where the crowd grows or shrinks as audience members leave and new ones join? If the number of phones displaying the artwork changes at any time, Shawn’s program will be able to adapt by refreshing the image and adjusting its size to fit the number and location of the devices detected.

“The approach involves having the camera and the phones act as a feedback loop where the camera reads what is displayed by the phones and the system attempts to create the best image that it can by adjusting frame by frame to the input.” – Shawn Laptiste
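The post doesn’t detail how Shawn’s genetic algorithm is built, so what follows is only a rough Python illustration of the general technique, not his code. It assumes that each detected phone shows a single RGB colour, that the target image supplies one colour per phone, and that the camera can be stood in for by a hypothetical `camera_read()` function that returns the displayed colours plus a little noise. Every name and parameter here (`evolve`, `fitness`, `mutate`, the population size, the mutation rate) is made up for the sketch.

```python
import random

# Illustrative sketch only (not Shawn's implementation): a genetic algorithm
# that evolves the colour each phone displays, scored through a simulated
# camera feedback loop. camera_read() is a hypothetical stand-in for a camera.


def clamp(value):
    """Keep a colour channel inside the 0-255 range."""
    return max(0, min(255, value))


def random_colour(rng):
    """A random (r, g, b) tuple."""
    return tuple(rng.randrange(256) for _ in range(3))


def camera_read(colours, rng, noise=8):
    """Simulate the camera observing the phones: displayed colours plus noise."""
    return [tuple(clamp(c + rng.randint(-noise, noise)) for c in rgb) for rgb in colours]


def fitness(frame, target):
    """Higher is better: negative squared error between a frame and the target."""
    return -sum(
        (a - b) ** 2
        for frame_rgb, target_rgb in zip(frame, target)
        for a, b in zip(frame_rgb, target_rgb)
    )


def crossover(parent_a, parent_b, rng):
    """Single-point crossover: the start of one parent, the rest of the other."""
    if len(parent_a) < 2:
        return list(parent_b)
    cut = rng.randrange(1, len(parent_a))
    return parent_a[:cut] + parent_b[cut:]


def mutate(candidate, rng, rate=0.1, step=24):
    """Nudge a random subset of phone colours by up to +/- step per channel."""
    return [
        tuple(clamp(c + rng.randint(-step, step)) for c in rgb) if rng.random() < rate else rgb
        for rgb in candidate
    ]


def evolve(target, population_size=30, generations=200, seed=0):
    """Evolve one colour per phone so the camera's view approaches the target."""
    rng = random.Random(seed)
    population = [[random_colour(rng) for _ in target] for _ in range(population_size)]
    for _ in range(generations):  # think of each generation as one video frame
        # Rank candidates by what the camera sees, which closes the feedback loop.
        ranked = sorted(
            population,
            key=lambda cand: fitness(camera_read(cand, rng), target),
            reverse=True,
        )
        parents = ranked[: population_size // 2]  # keep the best half (elitism)
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(population_size - len(parents))
        ]
        population = parents + children
    # Return the assignment whose intended colours best match the target.
    return max(population, key=lambda cand: fitness(cand, target))
```

Calling `evolve()` with a list of target colours, one per detected phone, returns a colour for each phone. Scoring candidates on what `camera_read()` reports, rather than on the colours that were sent, is what turns the search into a feedback loop; if phones joined or left, the target list (and population) would simply be rebuilt at the new size.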

Shawn spent the rest of the lab speeding up processing through optimization and finalizing the web app that communicates with the server. While he admitted there were still many kinks to smooth out, Shawn ended his talk with a bang by running a demo of the early prototype with the audience. With a little teamwork, the eager crowd created a small sea of colour, cell phones held high.

You can follow Shawn on Instagram, Twitter, GitHub and itch.io: @lazerfalcon

Kofi Oduro

Kofi’s VR experience Diluted: Am I In Control? aims to evoke the feeling of being lost, using artificial intelligence to play with the senses. Trapped in an abstract space with no obvious goal, the player must decide what to do next: will they immediately attempt an escape, or will they simply explore? The AI will adapt the experience to the player’s choices.

“During my first meeting with [Stéphanie Bouchard, Founder & CEO of Stockholm Syndrome.AI], we made tweaks to what kind of interactions would be instigated by the world and which ones would be instigated by the player. I’m also hoping to dig deeper into the aesthetics and see what type of scripts and algorithms I want to use to build this immersive world.” – Kofi Oduro

Kofi worked with Stéphanie to establish a clearer narrative and user journey for the experience he wanted to create. Kofi came into the lab with a wealth of ideas, and the first challenge was deciding which of them would have the most impact on the experience and the player. Through extensive storyboarding and map-building, they determined what the player would see, what types of objects they’d encounter and what events would be triggered by interacting with them. With the player experience fleshed out, Kofi was then able to explore which tools would best serve his process; he went with Unity (a cross-platform real-time game engine) and Bolt (a visual scripting tool for Unity by Montreal-based Ludiq). Kofi is still working towards a branching narrative that will ensure each player has a unique experience, but our audience on Wednesday night was delighted to get a taste of the project with a trippy VR demo he put together for us!

You can visit Kofi’s website here.

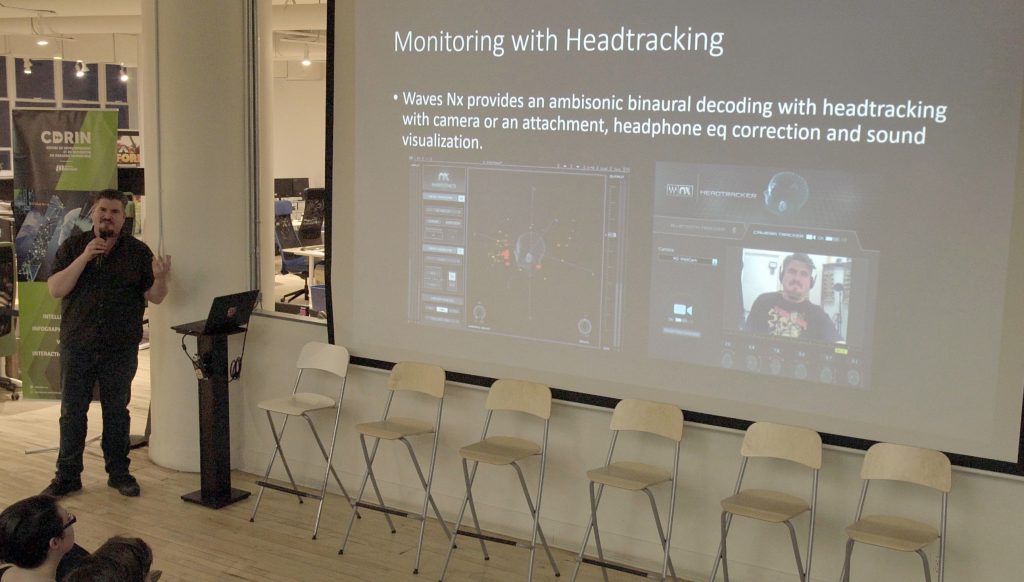

Travis Mercredi

Travis initially proposed creating a reactive spatial soundscape with an improved sense of immersion, using audio and video footage recorded in the Northwest Territories. His main intent was to learn; coming from a traditional background in sound design, he was really interested in expanding his knowledge and skills into 360-video and virtual reality (VR). He began by experimenting with and researching the workflow and pipeline of spatial audio. From the start, Travis’s main struggle was finding the right software to fit his needs and working out the most effective process and workflow:

“Wanting to move in a new direction is what brought me here. I had a background in sound design in post-production but what intrigued me were these things that weren’t available at the time. I feel like an interloper in some ways. This experience with the NFB is going into me, and back up to the North—I’m like a conduit.” – Travis Mercredi

Travis’s goal was to explore the boundaries of the 360-video experience, to bring in the practical workflow he had picked up from his work in post-production, and to allow himself to get creative in a medium he’s less familiar with.

“In some ways, I was hoping for a preferable platform that lived up to all of my considerations, but I realized we are not there yet in terms of available software options. They are all targeted at different areas and capacities, some being more practical than others for a post-production video workflow.” – Travis Mercredi

Travis ultimately settled on Reaper (a digital audio workstation and MIDI sequencer created by Cockos), and highly recommended it to anyone interested in working with audio. Using an ambisonic microphone, a sphere-like device that captures full-sphere surround sound, Travis recorded the audio for his chosen footage and merged it with his 360 footage to create an immersive experience. After the presentations finished, attendees were treated to a “first glimpse” of his soundscape!

You can visit Travis’s website here.

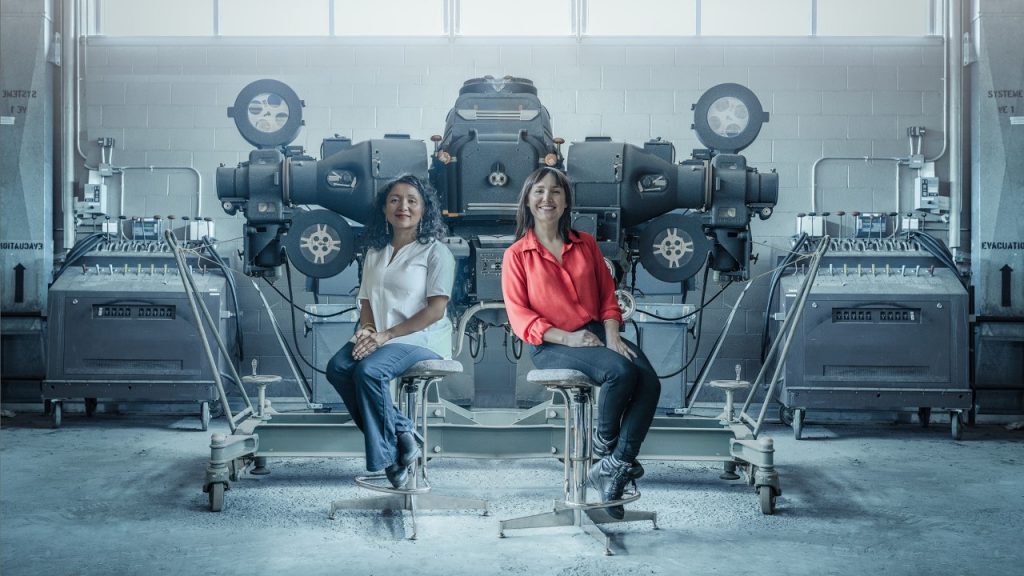

e→d films

In late March, e→d films held a special beta test at NFB headquarters for their newest plugin, 2D to 3D, which facilitates working between Photoshop and 3D in a new and exciting way. The tool was designed primarily for artists who are unfamiliar with 3D or unsatisfied with the current pipeline. NFB/ONFxp provided the space, the necessary hardware, and six artists eager to test the new tool. Dan Gies led the workshop, which helped our testers become familiar with the new plugin, while helpers David Barlow-Krelina and Roxann Veaudry-Read circulated to answer questions and troubleshoot any issues. Once the six testers understood what the tool could do, they set off to bring their creations to life.

“What we wanted to look at was: How can we take the best of 3D, the best of 2D and mash them together to get rid of the really complex learning process around 3D? 3D is really daunting—so we had an idea. Let’s just take Photoshop and add some 3D glasses! Maybe instead of going all the way 3D, we can go a little 3D and see what happens.” – Dan Gies, Art Director at e→d films

Once the three-day beta test was complete, participants shared their work with the group and expressed their final thoughts.

“[We came to the NFB] to do this workshop to see: Was the process working? What could artists get out of it, what were the problems that they were running into? And because of this, we were able to see their workflow in real time and see how they would approach different tools.” – Dan Gies

The workshop not only provided extremely valuable feedback to e→d films, but also inspired the participating artists to push their work further and in new ways.

You can find e→d films at their website, here, and on social media at @e.d.films.

Would you like to beta-test e→d films’ new plugin? Reach out to them by e-mail: info@edfilms.net

During the event’s Q&A and discussion period, the first question was: “What’s next?” All of the creators expressed passion for their respective projects and said they would continue to push them further. NFB/ONFxp organizers hope that everyone who attended (or caught the event on Facebook Live) felt inspired and left daydreaming about their own creative endeavours yet to be explored. To learn more about this experimental lab and its first-ever participants, check out the first blog post on NFB/ONFxp here.